to multimedia data.

Answer:

- Repetitive Sequence Suppression.

- Run-length Encoding.

- Pattern Substitution.

Run-length Encoding : 1 from Computer graphics generated images, Faxes, part of JPEG (latter stage) pipeline.

Pattern Substitution : 1 from Pattern recognition/token substitution, Entropy coding (Huffman), LZW/GIF, vector quantization.

Question. What is the basic concept used in defining an Information Theoretic approach to data compression?

Answer:

The entropy of an information source S, defined as:

,

is the basis Information Theoretic compression algorithms.

Question: What advantages does the arithmetic coding algorithm offer over Huffman coding algorithm with respect to data compression?

Answer:

- Good compression ratio (better than Huffman coding), entropy around the Shannon Ideal value. – Huffman coding uses an integer number of bits for each symbol,

- hence k is never less than 1.

- – Use decimal number of bits

- Memory: potentially large symbol tables needed.

- Speed due possibly complex computations due to large symbol tables.

Question: Given the following Differential Pulse Code Modulated (DPCM) Sequence reconstruct the original signal.

+4 + 2 + 3 - 2 + 3 - 1 + 1 + 1

Answer:

DPCM decoding: Simply start with accumulator zero for each number add the value to current accumulator, output accumulator value.

So solution is:

4 6 9 7 10 9 10 11

Question: Given the following Run Length Encoded (RLE) Sequence reconstruct the

original 2D 8x8 (binary) data array.

(0, 8),

(0, 1), (1, 1), (0, 4), (1, 1), (0, 1),

(0, 1), (1, 2), (0, 2), (1, 2), (0, 1),

(0, 1), (1, 6), (0, 1),

(0, 2), (1, 4), (0, 2),

(0, 3), (1, 2), (0, 3),

(0, 2), (1, 1), (0, 2), (1, 1), (0, 2),

(0, 1), (1, 1), (0, 4), (1, 1), (0, 1)

Answer:

The format of RLE is for each pair (colour, length) so just ‘parse’ each row, to

expand colour to length number of values to get the solution:

Question: What are the key differences between the JPEG and MPEG I-Frame compression

pipeline?

Answer:

Four main differences:

- JPEG uses YIQ whilst MPEG use YUV (YCrCb) colour space.

- MPEG used larger block size DCT windows 16 even 32 as opposed to JPEG’s 8.

- Different quantization— MPEG usually uses a constant quantizationvalue.

- Only Discrete Pulse Code Modulation (DPCM) on DC component in JPEG on zig zag scan. AC (JEPG) and complete zig zag scan get RLE.

Question: What processes give rise to the lossy nature of JPEG/MPEG video compression?

Answer:

Lossy steps:

- Colour space subsampling in IQ or UV components.

- Quantization reduces bits needed for DCT components.

Question:

(A) In MPEG audio compression, what is frequency masking?

Answer:

When an audio signal consists of multiple frequencies the sensitivity of the ear changes with the relative amplitude of the signals. If the frequencies are close and the amplitude of one is less than the other close frequency then the second frequency may not be heard.

(B) Briefly describe the cause of frequency masking in the human auditory system?

Answer:

Frequency Masking:

- Stereocilia in inner ear get excited as fluid pressure waves flow over them.

- Stereocilia of different length and tightness on Basilar membrane so resonate in sympathy to different frequencies of fluid waves (banks of stereocilia at each frequency band).

- Stereocilia already excited by a frequency cannot be further excited by a lower amplitude near frequency wave.

(C) In MPEG audio compression, what is temporal masking?

Answer:

After the ear hears a loud sound, consisting of multiple frequencies, it takes a further short while before it can hear a quieter sound close in frequency.

(D) Briefly describe the cause of temporal masking in the human auditory system?

Answer:

- (Like frequency masking) Stereocilia in inner ear get excited as fluid pressure waves flow over them and respond to different frequencies.

- Stereocilia already excited by a certain frequency will take a while to return to rest state, as inner ear is a closed fluid chamber and pressure waves will eventually dampen down.

- Similar to frequency masking Stereocilia in a ’dampening state’ may not respond to a a lower amplitude near frequency wave.

(E) Briefly describe, using a suitable diagram if necessary, the MPEG-1 audio compression algorithm, outlining how frequency masking and temporal masking are encoded.

Answer:

MPEG audio compression basically works by:

- Dividing the audio signal up into a set of frequency subbands (Filtering)

- Use filter banks to achieve this.

- Subbands approximate critical bands.

- Each band quantised according to the audibility of quantization noise.

Frequency masking and temporal masking are encoded by:

Frequency Masking: MPEG Audio encodes this by quantising each filter bank with adaptive values from neighbouring bands energy, defined by a look up table.

Temporal Masking: Not so easy to model as frequency masking. MP3 achieves this with a 50% overlap between successive transform windows gives window sizes of 36 or 12 and applies basic frequency masking as above.

Question: Construct coding tree for ROADROADIES by Shannon-Fano Algorithm and calculate the entropy. Get the Compression Ratio also for the same.

Answer:

Shannon-Fano Algorithm:

log2(1/pi) is calculated as:

Ex: For symbol R:

pi=Count/total number of counts = 2/11=0.18181

log2(1/0.18181) = 2.459 approx.

Code is calculated as:

Ex: Traversing the tree from top to down.

No. of bits used is calculated as:

Ex: For symbol R:> count*code[number of bits/digits]= 2*1=2

For symbol I:> count*code[number of bits]= 1*5=5

Answer:

Shannon-Fano Algorithm:

- Sort the symbols according to the frequency count of their occurrences.

- Recursively divide the symbols into two parts, each with approximately the same number of counts, until all the parts contain only 1 symbol.

- Below are the letters with their occurrence counts.[occurrence counts should be in descending form]

Ex: For symbol R:

pi=Count/total number of counts = 2/11=0.18181

log2(1/0.18181) = 2.459 approx.

Code is calculated as:

Ex: Traversing the tree from top to down.

No. of bits used is calculated as:

Ex: For symbol R:> count*code[number of bits/digits]= 2*1=2

For symbol I:> count*code[number of bits]= 1*5=5

Calculate Entropy:

Hence, 2.7312 number of bits are required to represent each symbol. [It can be asked as, What is the average number of bits needed for each character?]

Compression Ratio: This can be found as:

Default ASCII encoding: 8 bits per symbol

Result after Shannon Fano Coding: 2.7312 bits per symbol

Compression Ratio = (11*8)/(11*2.7312) = 2.929

=2.7312

Compression Ratio: This can be found as:

Default ASCII encoding: 8 bits per symbol

Result after Shannon Fano Coding: 2.7312 bits per symbol

Compression Ratio = (11*8)/(11*2.7312) = 2.929

Question: Construct Huffman tree to encode the table below. What is the average number of bits needed for each character?

Symbol | A | B | C | D | E | F | G |

Frequency | 28 | 10 | 20 | 13 | 5 | 15 | 9 |

Answer: Huffman Coding: Huffman coding is a lossless data compression algorithm. The most frequent character gets the smallest code and the least frequent character gets the largest code.

Step 1. Build a min heap that contains 7 nodes where each node represents root of a tree with single node.

Symbol Frequency

E 5

G 9

B 10

D 13

F 15

C 20

A 28

Step 2. Extract two minimum frequency nodes from min heap. Add a new internal node with frequency 5 + 9 = 14.

Now min heap contains 6 nodes where 5 nodes are roots of trees with single element each, and one heap node is root of tree with 3 elements

B 10

D 13

Node 14

F 15

C 20

A 28Step 3: Extract two minimum frequency nodes from heap. Add a new internal node with frequency 10 + 13 = 23

Now min heap contains 5 nodes where 3 nodes are roots of trees with single element each, and two heap nodes are root of tree with more than one nodes.

Node 14

F 15

C 20

Node 23

A 28Step 4: Extract two minimum frequency nodes. Add a new internal node with frequency 14 + 15 = 29

C 20

Node 23

A 28

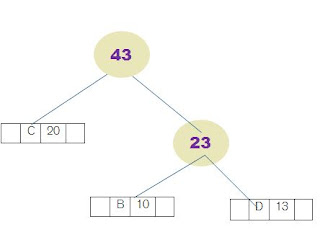

Node 29Step 5: Extract two minimum frequency nodes. Add a new internal node with frequency 20 + 23 = 43

Now min heap contains 3 nodes.

A 28

Node 29

Node 43

A 28

Node 29

Node 43

Step 6: Extract two minimum frequency nodes. Add a new internal node with frequency 28 + 29 = 57

Now min heap contains 2 nodes.

Node 43

Node 57

Step 7: Extract two minimum frequency nodes. Add a new internal node with frequency 43 + 57 = 100

Now min heap contains only one node.

Node 100

Since the heap contains only one node, the algorithm stops here.

Traverse the tree formed starting from the root. Maintain an auxiliary array. While moving to the left child, write 0 to the array. While moving to the right child, write 1 to the array

The codes are as follows:

Average number of bits needed for each character: Node 43

Node 57

Step 7: Extract two minimum frequency nodes. Add a new internal node with frequency 43 + 57 = 100

Node 100

Since the heap contains only one node, the algorithm stops here.

Traverse the tree formed starting from the root. Maintain an auxiliary array. While moving to the left child, write 0 to the array. While moving to the right child, write 1 to the array

The codes are as follows:

C 0

B 10

D 11

A 10

E 1100

G 1101

F 111

Entropy:

2.6282 average number of bits are required to represent each symbol.

Question: Perform run length encoding for the following and calculate compression ratio AC0000AAAAB00000000A0AAA00000.

Ans) Run length encoding

Before Encoding : AC0000AAAAB00000000A0AAA00000

After Encoding : 1A1C404A1B801A103A50

Compression Ratio = Total number of bits before encoding/Total number of bits after encoding

Compression Ratio = 29/20 = 1.45

No comments:

Post a Comment